Using time series analysis (Part 1)

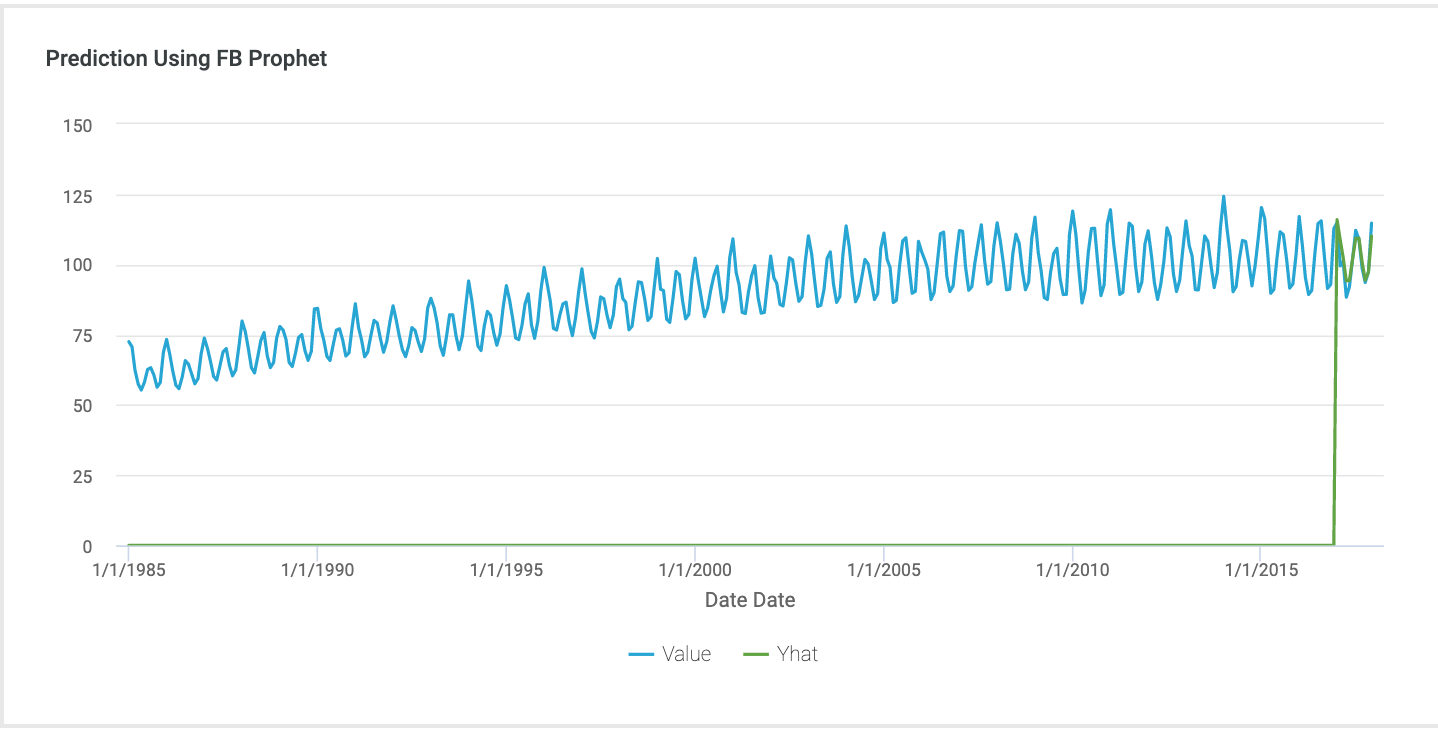

To practice time series analysis, I used a dataset from Kaggle:

https://www.kaggle.com/felixzhao/productdemandforecasting

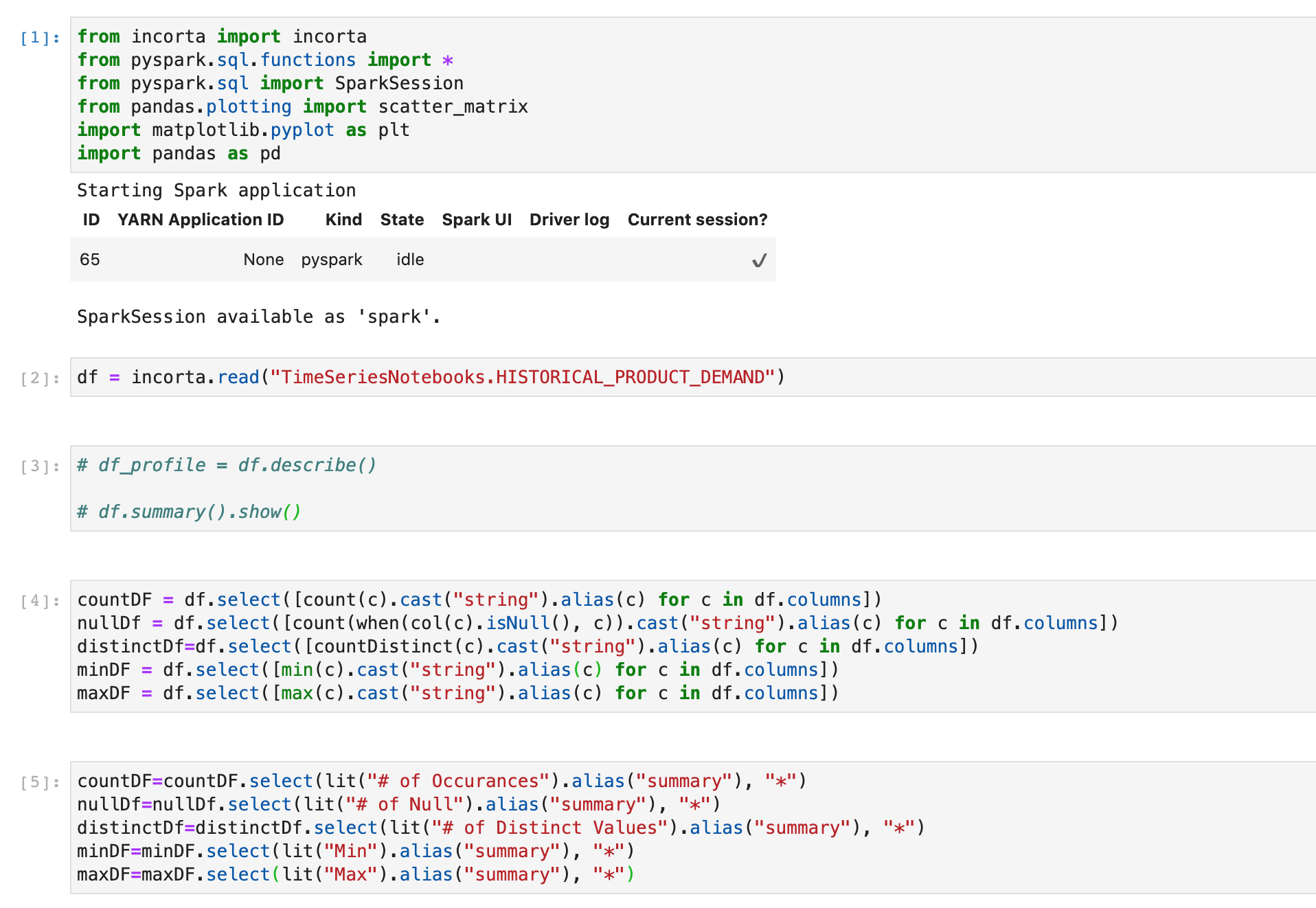

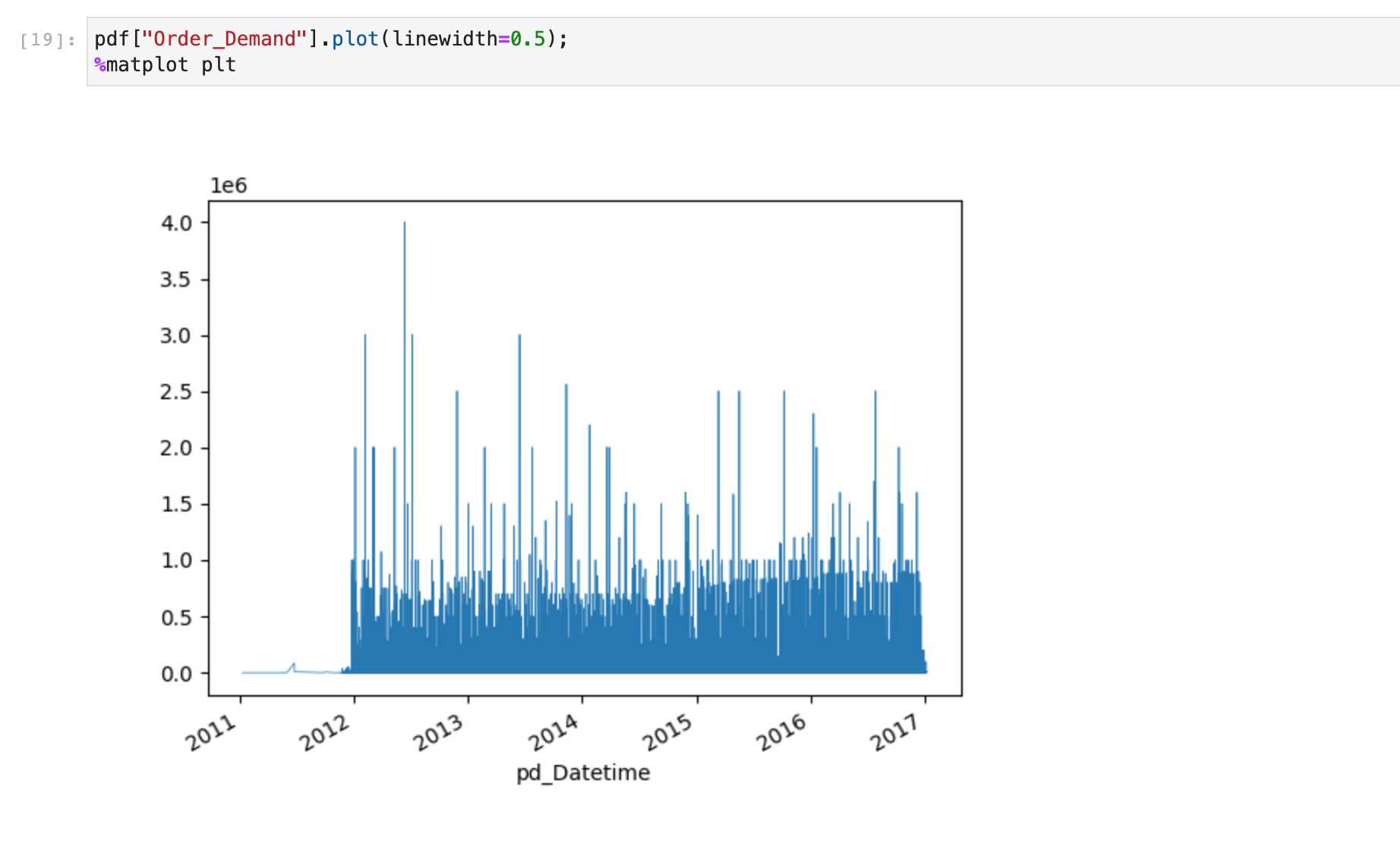

This dataset contains historical product demand by product and warehouse from 2011 to 2017. I loaded the data into Incorta and used the Incorta API to read it.

I first profiled the data to summarize it. The records can be grouped by product code, warehouse, and product category, so I plan to build separate time series for different product categories and warehouses.
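A minimal pandas sketch of this kind of profiling follows, assuming the data is already in a pandas DataFrame. The column names (`Product_Code`, `Warehouse`, `Product_Category`, `Date`, `Order_Demand`) and the sample rows are hypothetical stand-ins, not values read from the real dataset. It counts the distinct values of each grouping key and then summarizes demand for each category and warehouse pair, since each pair is a candidate for its own time series.

```python
import pandas as pd

# Hypothetical sample rows; the column names are assumed to mirror the
# Kaggle file, but none of these values come from the real data.
df = pd.DataFrame(
    {
        "Product_Code": ["Product_0001", "Product_0001", "Product_0002", "Product_0003", "Product_0003"],
        "Warehouse": ["Whse_A", "Whse_A", "Whse_J", "Whse_J", "Whse_S"],
        "Product_Category": ["Category_005", "Category_005", "Category_019", "Category_019", "Category_019"],
        "Date": ["2012/01/05", "2012/02/10", "2012/01/20", "2012/03/15", "2012/03/16"],
        "Order_Demand": [100, 500, 20, 300, 40],
    }
)

# Number of distinct values in each candidate grouping key.
print(df[["Product_Code", "Warehouse", "Product_Category"]].nunique())

# Row count and total demand per (category, warehouse) pair;
# each pair could become a separate time series.
profile = (
    df.groupby(["Product_Category", "Warehouse"])["Order_Demand"]
    .agg(["count", "sum"])
    .reset_index()
)
print(profile)
```

Grouping by category and warehouse first, rather than by product code, keeps the number of series small enough to inspect by hand.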

Using the pandas time series functions was quite challenging. They need the index to be a datetime field, but I could not directly use the date or timestamp field coming from Spark. In the end, it worked after I read the date as a string field and converted it to datetime with pandas.
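The string-to-datetime step might look like the sketch below. It assumes the date reaches pandas as a string in `YYYY/MM/DD` form; the column names, the format string, and the sample rows are hypothetical. `pd.to_datetime` converts the strings, `set_index` turns them into a `DatetimeIndex`, and `resample` then builds a monthly demand series for each category and warehouse.

```python
import pandas as pd

# Hypothetical rows: assume the date arrives as a plain string column
# instead of a Spark date/timestamp type.
df = pd.DataFrame(
    {
        "Product_Category": ["Category_005", "Category_005", "Category_019", "Category_019"],
        "Warehouse": ["Whse_A", "Whse_A", "Whse_J", "Whse_J"],
        "Date": ["2012/01/05", "2012/01/28", "2012/02/10", "2012/03/15"],
        "Order_Demand": [100, 50, 20, 300],
    }
)

# Convert the strings to datetime64; errors="coerce" turns unparsable
# strings into NaT so they can be dropped instead of raising an error.
df["Date"] = pd.to_datetime(df["Date"], format="%Y/%m/%d", errors="coerce")
df = df.dropna(subset=["Date"])

# A sorted DatetimeIndex is what time-based functions like resample() expect.
ts = df.set_index("Date").sort_index()

# Monthly (month-start) total demand per (category, warehouse) pair.
monthly = (
    ts.groupby(["Product_Category", "Warehouse"])["Order_Demand"]
    .resample("MS")
    .sum()
)
print(monthly)
```

Using `errors="coerce"` is a design choice: a few malformed date strings are dropped rather than stopping the whole conversion, at the cost of silently losing those rows.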
